Data compression has become an essential tool for managing the explosive growth of digital information, and using it well means weighing several competing factors against one another.

Every second, millions of users across the globe upload photos, stream videos, download applications, and transfer files. Behind these everyday actions lies a complex technological challenge: how to store and transmit vast amounts of data efficiently without compromising quality or speed. Data compression serves as the invisible engine powering our digital experiences, yet few people understand the intricate tradeoffs that make it work.

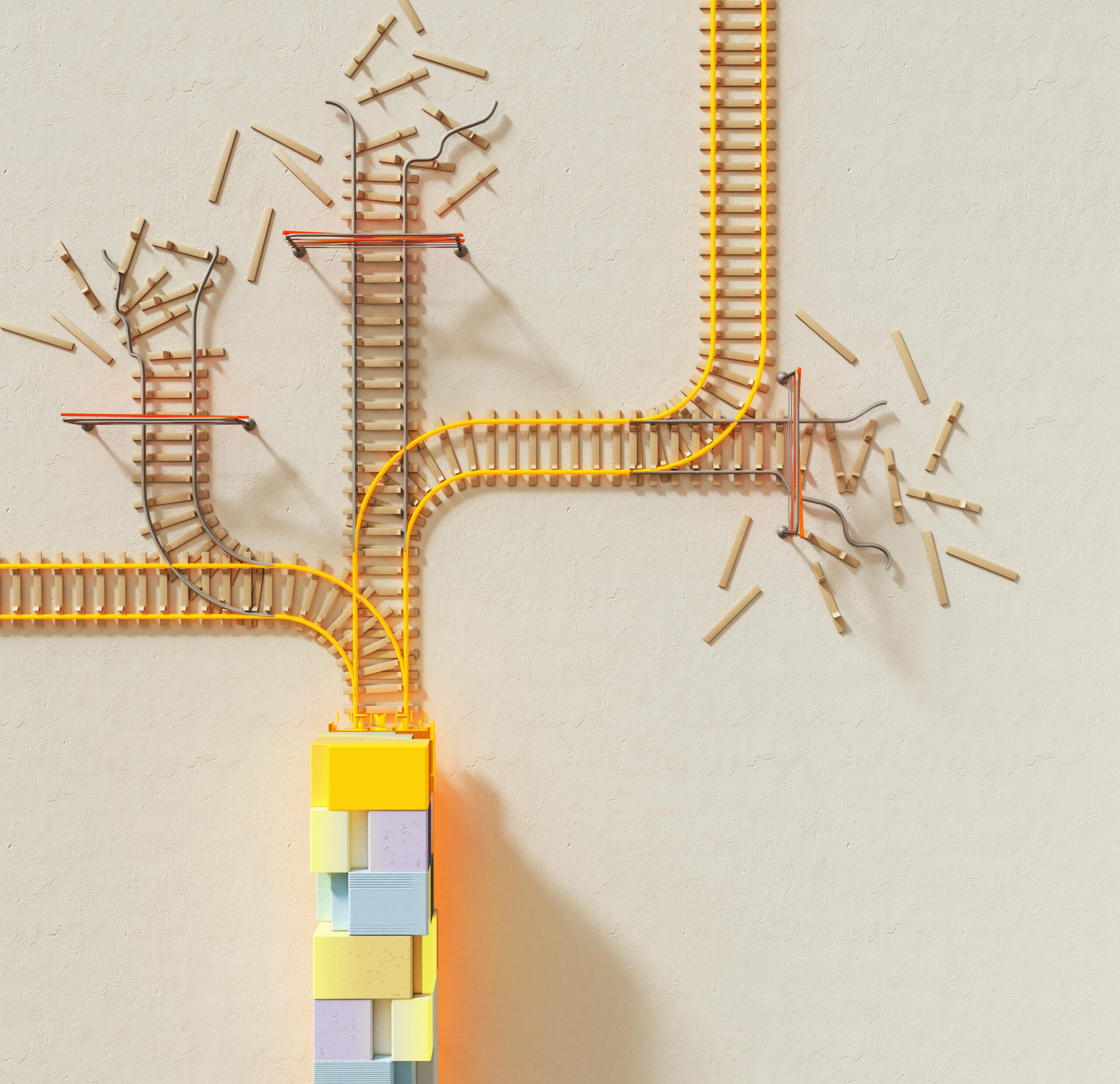

The art and science of data compression involves navigating a three-dimensional space where speed, efficiency, and quality constantly compete for priority. Understanding these tradeoffs isn’t just academic—it directly impacts everything from smartphone performance to cloud storage costs, streaming service quality, and enterprise data management strategies.

🔄 The Fundamental Triangle: Speed, Efficiency, and Quality

Data compression operates within an iron triangle where improving one dimension often means sacrificing another. Compression speed determines how quickly data can be compressed and decompressed. Efficiency measures how much the file size shrinks. Quality reflects how accurately the decompressed data matches the original.

High-speed algorithms such as LZ4 compress data at hundreds of megabytes per second per CPU core and decompress it at several gigabytes per second, but achieve relatively modest compression ratios. At the opposite end, LZMA and bzip2 deliver much higher compression efficiency while requiring significantly more processing time and computational resources. Lossy formats like JPEG and MP3 trade perfect reconstruction for dramatic size reductions, making quality itself a variable in the equation.
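
LZ4 is not part of Python's standard library, so the sketch below substitutes zlib's compression levels to illustrate the same speed-versus-ratio tradeoff inside a single algorithm. The synthetic sample data is an assumption, and timings depend heavily on hardware, so treat the printed numbers as illustrative rather than as benchmarks.

```python
import time
import zlib


def level_sweep(data: bytes, levels=(1, 6, 9)) -> list[tuple[int, float, float]]:
    """Return (level, compression ratio, seconds) for each zlib level."""
    results = []
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        # Ratio = original size / compressed size (higher means smaller output).
        results.append((level, len(data) / len(compressed), elapsed))
    return results


if __name__ == "__main__":
    # Synthetic, moderately repetitive sample (an assumption; use your own data).
    sample = b"".join(f"id={i % 500},status=ok,value={i * 7 % 1000}\n".encode()
                      for i in range(50_000))
    for level, ratio, secs in level_sweep(sample):
        print(f"level {level}: ratio {ratio:.2f}:1, {secs * 1000:.1f} ms")
```

Higher levels usually buy a somewhat better ratio at a noticeably higher compression cost; run the sweep on representative data before drawing conclusions.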

The challenge becomes more complex when we consider real-world applications. A video streaming service needs fast decompression for smooth playback, reasonable file sizes for bandwidth management, and sufficient quality to satisfy viewer expectations. Archive systems prioritize maximum compression efficiency over speed since files are compressed once but stored indefinitely. Real-time communication applications demand lightning-fast processing even if it means accepting larger file sizes.

Understanding Compression Ratios and Their Implications

Compression ratio describes the relationship between original and compressed file sizes and is usually expressed either as a ratio or as a percentage reduction in size. A file compressed from 100 MB to 50 MB achieves a 2:1 ratio, which is the same as a 50% reduction in size. However, this simple metric masks significant complexity in how different algorithms achieve their results.
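
Because "ratio" and "percentage" are easy to mix up, here is a small worked calculation. The function name is illustrative; the two formulas are the standard definitions of compression ratio (original / compressed) and space savings (1 - compressed / original).

```python
def compression_stats(original_size: int, compressed_size: int) -> tuple[float, float]:
    """Return (ratio, space_savings) for a compressed file.

    ratio         = original / compressed       (2.0 means 2:1)
    space_savings = 1 - compressed / original   (0.5 means 50% smaller)
    """
    if original_size <= 0 or compressed_size <= 0:
        raise ValueError("sizes must be positive")
    return original_size / compressed_size, 1 - compressed_size / original_size


ratio, savings = compression_stats(100_000_000, 50_000_000)
print(f"{ratio:.0f}:1 ratio, {savings:.0%} space savings")  # 2:1 ratio, 50% space savings
```

The two figures move differently: going from 100 MB to 25 MB doubles the ratio to 4:1 but raises the savings only from 50% to 75%, so each further halving of the output saves fewer absolute bytes.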

Lossless compression algorithms guarantee perfect reconstruction, making them essential for text documents, executable files, and scientific data where every bit matters. These algorithms exploit patterns and redundancies in data structure. Run-length encoding compresses sequences of identical values. Dictionary-based methods like LZW identify repeating patterns and replace them with shorter references. Entropy encoding assigns shorter codes to frequently occurring symbols.
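
To make the three lossless ideas concrete, here is a minimal Python sketch of each: run-length encoding, a textbook LZW encoder and decoder over bytes, and a function that derives Huffman code lengths (one common form of entropy coding). These are teaching versions with no bit packing or container format, not production codecs.

```python
import heapq
from collections import Counter


def rle_encode(data: bytes) -> list[tuple[int, int]]:
    """Collapse runs of identical bytes into (byte, run_length) pairs."""
    runs: list[tuple[int, int]] = []
    for b in data:
        if runs and runs[-1][0] == b:
            runs[-1] = (b, runs[-1][1] + 1)
        else:
            runs.append((b, 1))
    return runs


def rle_decode(runs: list[tuple[int, int]]) -> bytes:
    return b"".join(bytes([b]) * n for b, n in runs)


def lzw_encode(data: bytes) -> list[int]:
    """Emit dictionary indices, learning new multi-byte patterns as they appear."""
    table = {bytes([i]): i for i in range(256)}
    current, codes = b"", []
    for b in data:
        candidate = current + bytes([b])
        if candidate in table:
            current = candidate               # keep extending the match
        else:
            codes.append(table[current])      # emit the longest known prefix
            table[candidate] = len(table)     # learn the new pattern
            current = bytes([b])
    if current:
        codes.append(table[current])
    return codes


def lzw_decode(codes: list[int]) -> bytes:
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    prev = table[codes[0]]
    out = [prev]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            entry = prev + prev[:1]           # pattern defined by this very step
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        table[len(table)] = prev + entry[:1]  # mirror the encoder's table growth
        prev = entry
    return b"".join(out)


def huffman_code_lengths(data: bytes) -> dict[int, int]:
    """Bits per symbol in a Huffman code: frequent bytes get shorter codes."""
    freq = Counter(data)
    if len(freq) <= 1:
        return {sym: 1 for sym in freq}
    # Heap items: (total weight, unique tie-breaker, {symbol: depth so far}).
    heap = [(w, i, {sym: 0}) for i, (sym, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


print(rle_encode(b"aaabcc"))   # [(97, 3), (98, 1), (99, 2)]
print(lzw_encode(b"ABABABA"))  # [65, 66, 256, 258]: 4 codes for 7 bytes
# For b"aaaabbc": 'a' gets a 1-bit code, 'b' and 'c' get 2-bit codes.
print(huffman_code_lengths(b"aaaabbc"))
```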

Lossy compression accepts controlled information loss to achieve dramatically higher compression ratios. Perceptual coding techniques leverage human sensory limitations—discarding visual details the eye cannot perceive or audio frequencies the ear cannot hear. This approach works brilliantly for multimedia content but remains unsuitable for data requiring absolute accuracy.

⚡ The Need for Speed: Real-Time Compression Challenges

Modern applications increasingly demand real-time compression capabilities that can keep pace with data generation rates. Video conferencing, live streaming, gaming, and IoT sensors generate continuous data streams requiring immediate compression without introducing perceptible latency.

Speed-optimized algorithms employ several strategies to maximize throughput. Parallel processing divides data into independent chunks that can be compressed simultaneously across multiple CPU cores. Simplified algorithmic approaches reduce computational complexity, trading compression ratio for processing speed. Hardware acceleration through specialized chips or GPU processing can dramatically boost performance for specific compression formats.
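
A minimal sketch of chunked parallel compression, using zlib from Python's standard library and a thread pool. The 1 MiB chunk size and the worker count are arbitrary assumptions. Each chunk becomes an independent zlib stream, so matches cannot span chunk boundaries; that small ratio loss is the price paid for throughput. Threads only add real parallelism if the compressor releases Python's global interpreter lock while it works; a `ProcessPoolExecutor` sidesteps that question at the cost of copying data between processes.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per chunk (an illustrative choice, not a recommendation)


def compress_parallel(data: bytes, chunk_size: int = CHUNK_SIZE,
                      level: int = 6, workers: int = 4) -> list[bytes]:
    """Split data into fixed-size chunks and compress each one independently."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so frames line up with chunks.
        return list(pool.map(lambda c: zlib.compress(c, level), chunks))


def decompress_parallel(frames: list[bytes], workers: int = 4) -> bytes:
    """Decompress each independent frame and concatenate in order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return b"".join(pool.map(zlib.decompress, frames))
```

A real format would also record each frame's length so the frames can be stored back to back in one file; that framing is omitted here.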

The streaming video industry provides an excellent case study in speed optimization. Encoders for modern codecs like H.264 and VP9 offer fast encoding presets that sacrifice some compression efficiency for real-time performance. Cloud gaming services compress and transmit video frames within milliseconds to maintain responsive gameplay. These applications demonstrate that speed often takes priority over achieving maximum compression ratios.

Asymmetric Compression: When Encoding and Decoding Speeds Differ

Many compression algorithms exhibit asymmetric performance characteristics, where compression is computationally expensive but decompression is relatively fast. This asymmetry creates opportunities for optimization in scenarios where data is compressed once but decompressed many times.

Software distribution exemplifies this principle. Developers can invest significant time compressing application packages to minimize download sizes and bandwidth costs. Users benefit from quick decompression during installation without noticing the compression time investment. Video files follow similar patterns—intensive encoding once, followed by countless efficient playback operations.
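
One way to see the asymmetry on your own machine is to time both directions of a single round trip. This sketch uses LZMA from Python's standard library; the synthetic "package" contents are an assumption standing in for a real application bundle, and the measured gap will vary with data and hardware.

```python
import lzma
import time


def measure_asymmetry(data: bytes, preset: int = 6) -> tuple[float, float]:
    """Return (compress_seconds, decompress_seconds) for one LZMA round trip."""
    t0 = time.perf_counter()
    blob = lzma.compress(data, preset=preset)
    t1 = time.perf_counter()
    restored = lzma.decompress(blob)
    t2 = time.perf_counter()
    if restored != data:
        raise ValueError("round trip failed")
    return t1 - t0, t2 - t1


if __name__ == "__main__":
    # Synthetic stand-in for an application package (an assumption).
    package = b"".join(f"def handler_{i}(request): return {i % 97}\n".encode()
                       for i in range(20_000))
    comp_s, decomp_s = measure_asymmetry(package)
    print(f"compress {comp_s:.3f} s, decompress {decomp_s:.3f} s, "
          f"compress/decompress {comp_s / max(decomp_s, 1e-9):.1f}x")
```

If a package is compressed once and downloaded N times, the one-time compression cost is spread over N decompressions, which is why a slow compressor can still be the right choice for distribution.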

Understanding these asymmetric patterns helps optimize system architecture. Content delivery networks cache compressed versions of popular files, amortizing compression costs across millions of downloads. Database systems may compress archived records using slow but highly efficient algorithms since queries retrieving old data happen infrequently.

📊 Efficiency Optimization: Squeezing Every Byte

For applications where storage costs dominate or bandwidth is severely constrained, maximizing compression efficiency becomes paramount. Cloud storage providers, archival systems, and satellite communication networks often prioritize achieving the smallest possible file sizes regardless of processing time.

Advanced compression techniques push efficiency boundaries through sophisticated analysis and multi-pass processing. Context modeling builds statistical models of data patterns to improve prediction accuracy. Preprocessing transforms data into more compressible forms before applying standard algorithms. Dictionary optimization tailors the compressor to a specific data type, for example by priming it with a preset dictionary of strings that recur in that data.
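
One concrete, widely supported form of dictionary tuning is a preset dictionary, which zlib accepts through the `zdict` argument of `compressobj` and `decompressobj`. The sketch assumes small JSON records with a known shape; the dictionary contents are a hypothetical example, and the compressor and decompressor must use the identical dictionary.

```python
import json
import zlib

# Hypothetical preset dictionary: text we expect nearly every record to contain.
# zlib's documentation advises putting the most common strings toward the end.
PRESET = b'{"user_id":,"event":"page_view","timestamp":"2024-01-01T00:00:00Z","country":"'


def compress_record(record: dict, zdict: bytes = b"") -> bytes:
    raw = json.dumps(record, separators=(",", ":")).encode()
    comp = zlib.compressobj(9, zdict=zdict) if zdict else zlib.compressobj(9)
    return comp.compress(raw) + comp.flush()


def decompress_record(blob: bytes, zdict: bytes = b"") -> dict:
    decomp = zlib.decompressobj(zdict=zdict) if zdict else zlib.decompressobj()
    return json.loads(decomp.decompress(blob) + decomp.flush())


record = {"user_id": 42, "event": "page_view",
          "timestamp": "2024-01-01T00:00:00Z", "country": "DE"}
print(len(compress_record(record)), "bytes without a dictionary")
print(len(compress_record(record, PRESET)), "bytes with the preset dictionary")
```

Preset dictionaries matter most for small payloads, where a compressor otherwise has too little history to find repeats; on large files the benefit usually fades.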

The choice of compression algorithm dramatically impacts efficiency outcomes. For text and structured data, LZMA, bzip2, and Zstandard at its higher levels offer better compression ratios than speed-oriented alternatives such as LZ4. Format-specific tools target particular data types: PNG for images with sharp edges and FLAC for audio that must remain lossless, while general-purpose archivers such as 7-Zip, built around LZMA and LZMA2, aim for high ratios across mixed files.

Data-Specific Compression Strategies

Generic compression algorithms work reasonably well across diverse data types, but specialized approaches leveraging domain knowledge achieve far better results. Understanding your data characteristics enables selection of optimal compression strategies.

Textual data benefits from dictionary-based compression that exploits word repetition and grammatical patterns. XML and JSON files with verbose tag structures achieve impressive compression through structural encoding. Log files containing timestamp patterns and repetitive formatting compress particularly well with adaptive algorithms that learn patterns dynamically.
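
A simple form of structural encoding for JSON is a columnar transform. Instead of repeating every key in every record, each key is stored once, together with a list of its values. This is one possible approach, shown as a sketch that assumes every record has the same keys. General-purpose compressors already remove much of the repeated-key overhead on their own; the columnar layout mainly helps by grouping similar values together, so measure the effect on your own data.

```python
import json
import zlib


def to_columns(records: list[dict]) -> dict[str, list]:
    """Store each key once, with its values as a list (assumes uniform keys)."""
    keys = list(records[0]) if records else []
    return {k: [r[k] for r in records] for k in keys}


def from_columns(columns: dict[str, list]) -> list[dict]:
    """Rebuild the original list of records from the columnar form."""
    keys = list(columns)
    n = len(columns[keys[0]]) if keys else 0
    return [{k: columns[k][i] for k in keys} for i in range(n)]


def packed_size(obj) -> int:
    """Size after compact JSON serialization plus zlib level 9."""
    return len(zlib.compress(json.dumps(obj, separators=(",", ":")).encode(), 9))


records = [{"sensor": "t1", "reading": i % 17, "unit": "C"} for i in range(1000)]
print("row-oriented:", packed_size(records), "bytes")
print("columnar:    ", packed_size(to_columns(records)), "bytes")
```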

Scientific and numeric data presents unique opportunities. Many measurements contain limited precision despite being stored in high-precision formats. Quantization reduces numeric precision to levels matching actual measurement accuracy. Delta encoding stores differences between consecutive values rather than absolute numbers, leveraging temporal correlation in sensor data streams.
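
The two techniques combine naturally: quantize first (lossy, bounded error), then delta-encode the resulting integers (lossless). In this sketch, the 0.01 step is an assumed sensor resolution, and the readings are made-up example values.

```python
def quantize(values: list[float], step: float) -> list[int]:
    """Map each reading to the nearest multiple of `step` (lossy)."""
    return [round(v / step) for v in values]


def dequantize(levels: list[int], step: float) -> list[float]:
    """Approximate reconstruction; error is at most step / 2 per value."""
    return [q * step for q in levels]


def delta_encode(levels: list[int]) -> list[int]:
    """Keep the first value, then store differences between neighbours (lossless)."""
    return levels[:1] + [b - a for a, b in zip(levels, levels[1:])]


def delta_decode(deltas: list[int]) -> list[int]:
    """Running sum of the deltas restores the original integer sequence."""
    out, total = [], 0
    for d in deltas:
        total += d
        out.append(total)
    return out


readings = [20.013, 20.021, 20.034, 20.029]
levels = quantize(readings, 0.01)   # [2001, 2002, 2003, 2003]
deltas = delta_encode(levels)       # [2001, 1, 1, 0]
```

Slowly changing sensor data turns into long runs of small deltas like these, which a general-purpose or entropy coder then compresses far better than the raw floating-point values.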

Multimedia content demands format-specific approaches. Photographs typically use JPEG or WebP, graphics with transparency use PNG, and HEIF offers improved efficiency for images captured on mobile devices. Audio compression splits between lossless formats like FLAC for archival and lossy formats like AAC and Opus for streaming. Video codecs continue to evolve, with AV1 and VVC pushing efficiency boundaries for next-generation streaming services.

🎨 Quality Considerations: Preserving What Matters

Quality assessment in compression scenarios extends beyond simple bit-perfect reproduction. Perceptual quality measures how compressed data appears to human observers, often diverging significantly from mathematical similarity metrics. Applications must define acceptable quality thresholds based on use case requirements and user expectations.

Lossless compression maintains perfect fidelity, making quality a binary proposition—either the decompressed data exactly matches the original, or the compression has failed. This approach suits text documents, software code, financial records, medical images, and any data where accuracy is non-negotiable. The tradeoff lies entirely between speed and compression ratio.
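
Because lossless correctness is all-or-nothing, it is cheap to verify. The sketch below, an illustrative pattern rather than a standard API, records a SHA-256 digest of the original at compression time and refuses to return decompressed data that does not match. The `.xz` container that Python's `lzma` module produces already carries its own integrity check by default; the external digest extends the guarantee end to end, including through whatever storage layer holds the blob.

```python
import hashlib
import lzma


def pack(data: bytes) -> tuple[bytes, bytes]:
    """Compress and record a SHA-256 digest of the original bytes."""
    return lzma.compress(data), hashlib.sha256(data).digest()


def unpack(blob: bytes, digest: bytes) -> bytes:
    """Decompress and reject output that is not bit-identical to the original."""
    data = lzma.decompress(blob)
    if hashlib.sha256(data).digest() != digest:
        raise ValueError("decompressed data does not match the original")
    return data
```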

Lossy compression introduces a quality spectrum requiring careful navigation. JPEG quality settings range from heavily compressed small files with visible artifacts to barely compressed large files indistinguishable from originals. Video bitrate controls balance file size against visual clarity. Audio compression manages similar tradeoffs between file size and perceived fidelity.

Measuring and Maintaining Perceptual Quality

Evaluating lossy compression quality requires sophisticated metrics beyond simple mathematical calculations. Peak Signal-to-Noise Ratio (PSNR) provides a baseline measure but correlates poorly with human perception. Structural Similarity Index (SSIM) better captures perceptual differences by analyzing image structure, luminance, and contrast changes.
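
Both metrics are short enough to write out. PSNR is defined as $10 \log_{10}(\mathrm{MAX}^2 / \mathrm{MSE})$, where MAX is the largest possible sample value (255 for 8-bit data). The SSIM function below is deliberately simplified: it applies the SSIM formula once over the whole signal, whereas standard SSIM evaluates it over small local windows (commonly an 11×11 Gaussian window) and averages the results. It is meant only to show how luminance, contrast, and structure enter the score.

```python
import math


def psnr(original, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB for equal-length sample sequences."""
    if len(original) != len(distorted) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, distorted)) / len(original)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


def global_ssim(x, y, max_value=255):
    """Single-window SSIM over the whole signal (a simplification of real SSIM)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n                        # luminance (means)
    vx = sum((a - mx) ** 2 for a in x) / n                 # contrast (variances)
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n  # structure
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2  # stabilizers
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))
```

For example, an 8-bit signal in which every sample is off by exactly 1 has an MSE of 1 and a PSNR of about 48.13 dB.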

Modern quality assessment employs perceptual models trained on human judgments. Video Multi-Method Assessment Fusion (VMAF) combines several elementary quality metrics through a model trained on viewer ratings to predict perceived video quality. Perceptual audio codecs use psychoacoustic models to determine which frequency components can be discarded without audible quality loss. These approaches enable aggressive compression while maintaining an acceptable user experience.

Adaptive quality systems dynamically adjust compression parameters based on content characteristics and network conditions. Streaming services implement bitrate ladders offering multiple quality levels, automatically switching based on available bandwidth. Image delivery networks generate multiple compressed versions optimized for different device types and screen sizes. This adaptability ensures optimal experience across varying conditions.
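
At its simplest, bitrate ladder switching comes down to choosing the highest rendition that fits within the measured bandwidth, while keeping some headroom in reserve. The ladder values and the 0.8 safety factor below are hypothetical. Production players typically also weigh buffer level and recent throughput variance, which this throughput-only sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rung:
    height: int        # vertical resolution, e.g. 1080
    bitrate_kbps: int  # average video bitrate for this rendition


# Hypothetical ladder; real services often tune rungs per title or per scene.
LADDER = [Rung(240, 400), Rung(360, 800), Rung(480, 1400),
          Rung(720, 2800), Rung(1080, 5000)]


def pick_rung(ladder: list[Rung], measured_kbps: float, safety: float = 0.8) -> Rung:
    """Highest rung whose bitrate fits in a fraction of the measured bandwidth."""
    budget = measured_kbps * safety
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_kbps)
    return min(ladder, key=lambda r: r.bitrate_kbps)  # fall back to the lowest rung


print(pick_rung(LADDER, 4000))  # Rung(height=720, bitrate_kbps=2800)
```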

🔧 Practical Implementation Strategies

Successfully implementing compression strategies requires aligning algorithm selection with specific use cases, infrastructure capabilities, and business objectives. A systematic approach helps navigate the complex decision landscape.

Begin by profiling your data characteristics and usage patterns. Analyze data types, file sizes, access frequencies, and performance requirements. Measure baseline storage costs, bandwidth consumption, and processing capabilities. This foundation enables informed tradeoff decisions based on actual needs rather than assumptions.

Benchmark multiple compression algorithms against representative data samples. Measure compression ratios, processing speeds, and resource consumption under realistic conditions. Consider both compression and decompression performance, especially for asymmetric usage patterns. Test quality outcomes for lossy formats using appropriate perceptual metrics.
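
A minimal benchmarking harness along these lines, using the compressors in Python's standard library as stand-ins: add Zstandard or LZ4 through whichever third-party bindings you use, registered in the same `(compress, decompress)` form. The chosen levels are assumptions. The harness keeps the best of several runs to reduce timing noise and rejects any codec that fails a lossless round trip.

```python
import bz2
import lzma
import time
import zlib

CODECS = {
    "zlib-6": (lambda d: zlib.compress(d, 6), zlib.decompress),
    "bz2-9": (lambda d: bz2.compress(d, 9), bz2.decompress),
    "lzma-6": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
}


def benchmark(sample: bytes, codecs=CODECS, repeats: int = 3) -> dict[str, dict[str, float]]:
    """Ratio plus best-of-N compression and decompression throughput in MB/s."""
    results = {}
    for name, (compress, decompress) in codecs.items():
        best_c = best_d = float("inf")
        for _ in range(repeats):
            t0 = time.perf_counter()
            blob = compress(sample)
            t1 = time.perf_counter()
            restored = decompress(blob)
            t2 = time.perf_counter()
            best_c, best_d = min(best_c, t1 - t0), min(best_d, t2 - t1)
        if restored != sample:
            raise RuntimeError(f"{name} failed the round trip")
        mb = len(sample) / 1e6
        results[name] = {
            "ratio": len(sample) / len(blob),
            "compress_MBps": mb / max(best_c, 1e-9),
            "decompress_MBps": mb / max(best_d, 1e-9),
        }
    return results
```

Run it on a representative sample of your own data, not on synthetic input, since ratios and speeds depend strongly on the content being compressed.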

Building Flexible Compression Pipelines

Modern applications benefit from flexible compression architectures supporting multiple algorithms and adaptive selection. Implementing compression as a pluggable component allows experimentation and optimization without extensive refactoring.

Design systems that can apply different compression strategies to different data classes. Archive cold storage using maximum efficiency algorithms, compress frequently accessed data with fast algorithms, and leave performance-critical data uncompressed. Tag compressed data with algorithm identifiers enabling transparent decompression regardless of which method was used.
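
Tagging can be as simple as a one-byte header in front of each compressed payload. The codec IDs below are hypothetical. Once an ID is assigned, it must never be reused for a different codec, or data written earlier will no longer decode.

```python
import lzma
import zlib

# Hypothetical one-byte codec identifiers mapped to (compress, decompress) pairs.
CODECS = {
    0: (lambda d: d, lambda d: d),                                  # stored uncompressed
    1: (lambda d: zlib.compress(d, 1), zlib.decompress),           # fast, for hot data
    2: (lambda d: lzma.compress(d, preset=6), lzma.decompress),    # dense, for cold data
}


def encode(data: bytes, codec_id: int) -> bytes:
    """Prefix the compressed payload with the codec's identifier byte."""
    compress, _ = CODECS[codec_id]
    return bytes([codec_id]) + compress(data)


def decode(frame: bytes) -> bytes:
    """Read the tag, then dispatch to the matching decompressor."""
    if not frame:
        raise ValueError("empty frame")
    codec_id = frame[0]
    if codec_id not in CODECS:
        raise ValueError(f"unknown codec id {codec_id}")
    _, decompress = CODECS[codec_id]
    return decompress(frame[1:])
```

Readers call `decode` without knowing which codec wrote the frame, so data can be recompressed with a different codec later without touching the read path.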

Consider implementing tiered storage strategies where data migrates between compression schemes based on age and access patterns. Recently created data stays in fast-access storage with light compression. Aging data transitions to more aggressive compression as access frequency declines. Long-term archives employ maximum compression efficiency.
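
A tiering policy can start as a small function that maps age and recent access to a tier. Every threshold and codec choice below is a placeholder assumption, to be tuned against real access logs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Placeholder codec settings per tier (assumptions, not recommendations).
TIER_CODEC = {"hot": ("zlib", 1), "warm": ("zlib", 6), "cold": ("lzma", 9)}


@dataclass
class ObjectStats:
    created: datetime
    reads_last_30d: int


def choose_tier(stats: ObjectStats, now: datetime) -> str:
    """Young or busy objects stay lightly compressed; old, idle ones go cold."""
    age = now - stats.created
    if age < timedelta(days=7) or stats.reads_last_30d > 100:
        return "hot"
    if age < timedelta(days=90) or stats.reads_last_30d > 0:
        return "warm"
    return "cold"
```

A periodic job would evaluate `choose_tier` for each object and recompress the object only when its tier changes.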

🌐 Future Trends in Compression Technology

Compression technology continues advancing rapidly, driven by exponentially growing data volumes and emerging application requirements. Machine learning approaches now enhance traditional algorithms, learning optimal compression strategies from data patterns. Neural compression models trained on specific content types achieve impressive results, though computational requirements currently limit practical deployment.

Hardware acceleration is becoming increasingly important as dedicated compression and video-encoding engines appear in CPUs, GPUs, and specialized accelerator chips. For the formats they support, these hardware implementations can deliver large throughput gains, substantially narrowing the speed-efficiency tradeoff for specific use cases.

Newer codecs like JPEG XL for images, AV1 for video, and Opus for audio demonstrate that efficiency improvements remain achievable through algorithmic innovation. These formats often deliver 20-50% better compression than their predecessors at comparable quality, though some, notably AV1, require considerably more computation to encode. The tradeoff space therefore keeps shifting rather than disappearing.

Edge computing and IoT applications introduce new compression challenges requiring ultra-low latency processing within severe power and computational constraints. Lightweight algorithms optimized for embedded processors balance minimal resource consumption against reasonable compression performance. These specialized solutions demonstrate how compression continues adapting to emerging technological paradigms.

💡 Making Informed Tradeoff Decisions

Mastering compression tradeoffs starts with recognizing that no single solution fits all scenarios. The optimal approach depends on specific requirements, constraints, and priorities that vary significantly across applications.

Define clear success metrics before selecting compression strategies. Prioritize factors based on business value—is reducing storage costs more important than minimizing processing overhead? Does user experience depend more on quality or loading speed? Can compression happen offline, or must it occur in real-time?

Remember that tradeoffs evolve over time as technology advances and business requirements change. Regularly reassess compression strategies against current capabilities and needs. Technologies that seemed impractical due to computational costs may become viable as hardware performance improves, and new algorithms may offer better tradeoffs worth evaluating.

Build systems with flexibility to adapt compression approaches without architectural overhauls. Abstraction layers, pluggable components, and comprehensive monitoring enable continuous optimization. Track compression ratios, processing times, quality metrics, and resource consumption to identify improvement opportunities and validate optimization efforts.

The digital age generates data at unprecedented rates, making effective compression strategies essential for managing costs, maintaining performance, and delivering quality experiences. By understanding the fundamental tradeoffs between speed, efficiency, and quality, and applying this knowledge to specific use cases, organizations can optimize their compression approaches to meet current needs while remaining adaptable to future challenges. The key lies not in finding a perfect solution, but in making informed decisions that align technical capabilities with business objectives, creating systems that balance competing priorities effectively in an ever-evolving digital landscape. 🚀

Toni Santos is a researcher and historical analyst specializing in the study of census methodologies, information transmission limits, record-keeping systems, and state capacity implications. Through an interdisciplinary and documentation-focused lens, Toni investigates how states have encoded population data, administrative knowledge, and governance into bureaucratic infrastructure across eras, regimes, and institutional archives. His work is grounded in a fascination with records not only as documents, but as carriers of hidden meaning. From extinct enumeration practices to mythical registries and secret administrative codes, Toni uncovers the structural and symbolic tools through which states preserved their relationship with the informational unknown. With a background in administrative semiotics and bureaucratic history, Toni blends institutional analysis with archival research to reveal how censuses were used to shape identity, transmit memory, and encode state knowledge. As the creative mind behind Myronixo, Toni curates illustrated taxonomies, speculative census studies, and symbolic interpretations that revive the deep institutional ties between enumeration, governance, and forgotten statecraft. His work is a tribute to: the lost enumeration wisdom of Extinct Census Methodologies; the guarded protocols of Information Transmission Limits; the archival presence of Record-Keeping Systems; and the layered governance language of State Capacity Implications. Whether you're a bureaucratic historian, institutional researcher, or curious gatherer of forgotten administrative wisdom, Toni invites you to explore the hidden roots of state knowledge: one ledger, one cipher, one archive at a time.